The idea behind selection sort is straightforward: at each iteration, the unsorted element with the smallest (or largest) value is moved to its proper position in the array. Assume that we wish to sort an array in increasing order. We begin by selecting the smallest element and moving it to the lowest index position, which we can do by swapping the element at the lowest index with the smallest element. We then reduce the effective size of the unsorted part by one element and repeat the process on the smaller unsorted (sub)array. The process stops when only one unsorted item remains, since a single element is necessarily already in its proper position.
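To make the passes concrete, here is a minimal sketch without any plotting. It sorts a copy of the input in increasing order and records the array after each pass of the outer loop. The function name `selection_sort_passes` is hypothetical and is used only for this illustration.

```python
def selection_sort_passes(values):
    """Sort a copy of values in increasing order, recording the array after each pass."""
    a = list(values)  # work on a copy so the caller's list is untouched
    passes = []
    for j in range(len(a) - 1):  # a[:j] is already sorted
        i_min = j
        # scan the unsorted part a[j:] for the index of the smallest value
        for i in range(j + 1, len(a)):
            if a[i] < a[i_min]:
                i_min = i
        # move that value to position j, the front of the unsorted part
        a[j], a[i_min] = a[i_min], a[j]
        passes.append(list(a))  # snapshot after this pass
    return a, passes


result, passes = selection_sort_passes([5, 2, 4, 1, 3])
for k, state in enumerate(passes):
    print(k, state)
```

For `[5, 2, 4, 1, 3]` this prints four states (`[1, 2, 4, 5, 3]`, `[1, 2, 4, 5, 3]`, `[1, 2, 3, 5, 4]`, `[1, 2, 3, 4, 5]`): an array of n elements needs n - 1 passes, and a pass can leave the array unchanged when the smallest unsorted value is already in place.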

Let's see a Python implementation of selection sort that uses a graph to visualize the state of the sort at each iteration:

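```python
import os
from random import shuffle

import pylab


def selectionsort_anim(a):
    """Sort the list a in place, saving a plot of its state after each pass."""
    x = range(len(a))
    for j in range(len(a) - 1):
        iMin = j
        for i in range(j + 1, len(a)):
            if a[i] < a[iMin]:  # find the smallest value
                iMin = i
        if iMin != j:  # place the value into its proper location
            a[iMin], a[j] = a[j], a[iMin]
        # plotting: array indexes on the x axis, values on the y axis
        pylab.plot(x, a, 'k.', markersize=6)
        pylab.savefig("selectionsort/img" + '%04d' % j + ".png")
        pylab.clf()  # figure clear


# running the algorithm
a = list(range(300))  # initialization of the array (a list, so it can be shuffled)
shuffle(a)  # shuffle!
os.makedirs("selectionsort", exist_ok=True)  # make sure the output directory exists
selectionsort_anim(a)  # sorting
```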

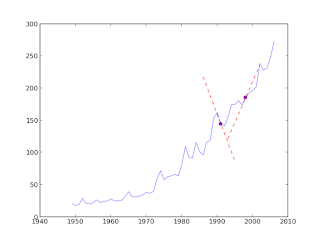
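Since `selectionsort_anim` saves one image per pass of the outer loop, a 300-element array produces 299 frames, and the zero-padded `'%04d' % j` naming keeps them in order for a numbered pattern such as `img%04d.png`. The small check below reproduces that naming; `frame_names` is a hypothetical helper, and the duration assumes the frames are played back at 20 frames per second, the rate used in the ffmpeg command below.

```python
def frame_names(n, pattern="img%04d.png"):
    """File names selectionsort_anim would write for an array of length n."""
    # the outer loop runs for j = 0 .. n-2, saving one frame per pass
    return [pattern % j for j in range(n - 1)]


names = frame_names(300)
print(len(names), names[0], names[-1])  # 299 img0000.png img0298.png
print(len(names) / 20, "seconds at 20 frames per second")  # 14.95
```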

At each iteration, the state of the algorithm is visualized by plotting the indexes of the array against their values. Every plot is saved as an image, and the images can easily be joined into a video using ffmpeg:

```
$ cd selectionsort # the directory where the images are
$ ffmpeg -qscale 5 -r 20 -b 9600 -i img%04d.png movie.mp4
```

The result should be as follows:

This is just the first of a series of posts about the visualization of sorting algorithms. Stay tuned for the animations of other sorting algorithms!